Introduction

What Are Sound Waves?

Sound is an essential part of human experience, shaping communication, music, entertainment, and even our emotions. At its most fundamental level, sound is a wave that travels through a medium such as air, water, or a solid material. When an object vibrates, it disturbs nearby particles, creating alternating regions of compression and rarefaction that propagate as sound waves. These waves travel at different speeds depending on the medium, generally fastest in solids and slowest in gases, which explains why sound behaves differently underwater than in open air. The frequency of a sound wave determines its pitch: higher frequencies produce higher-pitched sounds, like a whistle, and lower frequencies produce deeper tones, like a drum. Amplitude, on the other hand, determines loudness: greater amplitudes result in louder sounds, while smaller amplitudes produce softer ones. Most sounds we hear are a combination of multiple frequencies, harmonics, and intensities, which together give each sound its distinct character. This is why a piano and a guitar playing the same note still sound different, a property known as timbre. Unlike electromagnetic waves such as light, sound waves are mechanical: they need a medium to travel through. Waves can also be longitudinal or transverse, and audible sound in air is a longitudinal mechanical wave. Humans typically hear sounds within the range of 20 Hz to 20,000 Hz, but many animals, such as bats and dolphins, perceive frequencies far beyond this range. The study of sound waves is fundamental to fields such as acoustics, audio engineering, speech processing, and music production, which makes it a widely studied area of science and technology.
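To make the roles of frequency and amplitude concrete, the sketch below synthesizes a few pure tones using only the Python standard library. The sample rate, tone parameters, and helper names (`make_tone`, `rms`, `level_difference_db`) are illustrative assumptions and are not taken from the project itself. It shows that changing the frequency (pitch) leaves the signal level unchanged, while a tenfold drop in amplitude lowers the level by about 20 dB.

```python
import math

SAMPLE_RATE = 8000  # samples per second (illustrative assumption)

def make_tone(freq_hz, amplitude, duration_s=1.0, sample_rate=SAMPLE_RATE):
    """Return the samples of a pure sine tone."""
    n = int(duration_s * sample_rate)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n)]

def rms(samples):
    """Root-mean-square level, a simple stand-in for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def level_difference_db(samples_a, samples_b):
    """Level of samples_a relative to samples_b, in decibels."""
    return 20 * math.log10(rms(samples_a) / rms(samples_b))

low_note = make_tone(440, 0.5)     # lower pitch
high_note = make_tone(880, 0.5)    # one octave higher, same amplitude
quiet_note = make_tone(440, 0.05)  # same pitch, one tenth the amplitude

# Same amplitude gives the same RMS level regardless of pitch.
print(round(rms(low_note), 3), round(rms(high_note), 3))
# A tenfold amplitude ratio corresponds to roughly 20 dB.
print(round(level_difference_db(low_note, quiet_note), 1))
```

RMS is only a rough proxy: perceived loudness also depends on frequency and on the listener, which this sketch does not model.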

Why Sound Matters

Sound is much more than a physical phenomenon: it plays a crucial role in health, communication, technology, and mental well-being. From the alarm clock that wakes us to the music we listen to while relaxing, sound affects nearly every aspect of our lives. Certain sounds can trigger emotions; upbeat music can energize us, while slow, soothing melodies can help us relax. This emotional impact is why sound is used extensively in therapy, marketing, and entertainment to influence human behavior. Beyond emotions, sound plays a vital role in health and medical research. For instance, doctors use ultrasound to examine internal organs, detect anomalies, and monitor fetal development during pregnancy. In neuroscience, researchers study how sound stimuli might help treat conditions like tinnitus (ringing in the ears), speech disorders, and even Alzheimer’s disease. Sound also affects productivity and focus: some studies suggest that steady background sound, such as white noise or nature sounds, can improve concentration by masking distracting noises. In technology, sound processing is essential for speech recognition (Siri, Alexa), noise cancellation, and biometric authentication through voice recognition. Environmental sound monitoring is another area where sound analysis is valuable, helping researchers track deforestation, ocean noise pollution, and endangered species through their vocalizations. Whether for entertainment, health, or security, sound is a powerful tool that continues to shape modern life in ways we often take for granted.

Who Benefits from Sound Analysis?

The study and analysis of sound waves have far-reaching applications that benefit industries, researchers, and everyday users. Musicians and audio engineers rely on sound analysis to improve music production, ensuring that different frequencies blend well in the final mix. Film and game developers use advanced sound processing to create immersive audio environments, where every footstep, explosion, or whisper is fine-tuned to match the scene. In healthcare, doctors and medical researchers use sound analysis to help diagnose conditions such as abnormal heart rhythms and respiratory issues, and to assess speech disorders by analyzing vocal patterns. Speech recognition technology, used in voice assistants like Siri, Alexa, and Google Assistant, relies heavily on sound wave analysis to interpret spoken commands and convert them into text. In environmental science, monitoring natural soundscapes helps researchers track wildlife activity, detect illegal deforestation, and gauge the health of ecosystems through bioacoustic studies. Law enforcement and security agencies use forensic audio analysis to identify voices, detect tampered recordings, and recognize gunshots in audio surveillance. Manufacturing industries use acoustic monitoring to detect mechanical faults by analyzing the sounds and vibrations machines produce, enabling predictive maintenance and reducing downtime. Psychologists and neuroscientists explore how sound stimuli affect human behavior, memory, and cognition, leading to advances in music therapy and mental health treatment. Sound analysis even plays a role in space exploration: although sound does not travel through the vacuum of space, analyzing acoustic and vibration signals inside spacecraft systems can help detect malfunctions before they become critical. With applications spanning so many disciplines, sound analysis is a vital field that continues to evolve alongside new technology.

Existing Approaches and Their Limitations

Existing work in sound analysis spans methods ranging from traditional signal processing to modern machine learning. Fourier transforms and wavelet analysis have long been standard tools for decomposing sounds into frequency components, while more recent deep learning approaches such as convolutional neural networks excel at complex pattern recognition in audio. Feature extraction methods, including the spectral and MFCC (Mel-frequency cepstral coefficient) features that appear in this project's association rules, form the foundation of many audio classification systems. However, these approaches face significant limitations. Current methods often struggle with background noise, which makes real-world applications challenging in unpredictable acoustic environments. Many techniques require substantial labeled training data, limiting their applicability to underrepresented sound categories. Association Rule Mining provides interpretable insights into feature relationships, as the strong correlations between spectral features in this project's rules illustrate, but it lacks the temporal modeling needed to analyze dynamic sounds. Additionally, most current approaches treat audio features independently rather than capturing their interdependencies, which the high-lift associations among feature combinations in this project's rules bring out. Furthermore, computational efficiency remains a challenge, particularly on resource-constrained devices, and cross-domain generalization often fails when systems trained on one type of audio are applied to different acoustic contexts. Addressing these limitations requires interdisciplinary approaches that combine signal processing expertise with advanced machine learning while maintaining interpretability.

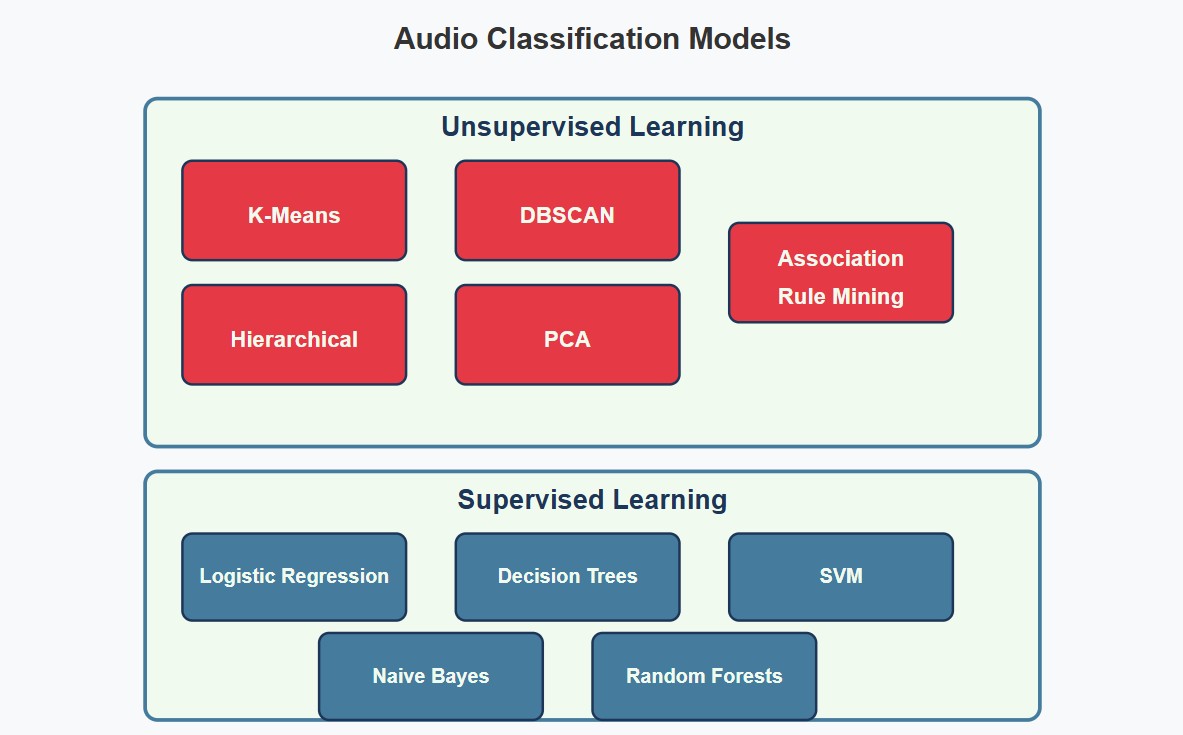
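As a small illustration of what "decomposing sounds into frequency components" means, the following sketch applies a naive discrete Fourier transform to a synthetic two-tone signal. It uses only the standard library for portability. The signal, the sample rate, and the function name `dft_magnitudes` are assumptions made for this example; a real pipeline would normally rely on an optimized FFT routine.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Naive O(N^2) discrete Fourier transform.

    Returns the magnitude of each frequency bin from 0 up to N/2.
    """
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(samples))
        mags.append(abs(acc))
    return mags

SAMPLE_RATE = 1000  # Hz (illustrative assumption)
N = 200             # 0.2 s of audio, so each bin is 5 Hz wide

# Synthetic signal: a 50 Hz tone plus a quieter 120 Hz tone.
signal = [math.sin(2 * math.pi * 50 * i / SAMPLE_RATE)
          + 0.5 * math.sin(2 * math.pi * 120 * i / SAMPLE_RATE)
          for i in range(N)]

mags = dft_magnitudes(signal)
bin_hz = SAMPLE_RATE / N

# The two strongest bins should recover the two component frequencies.
top_two = sorted(range(len(mags)), key=lambda k: mags[k], reverse=True)[:2]
peak_freqs = sorted(k * bin_hz for k in top_two)
print(peak_freqs)
```

Both tones were chosen to fall exactly on bin centers; frequencies between bins would smear across neighboring bins, which is one reason windowing is commonly applied in practice.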

Project Scope and Models Implemented

This project focuses on analyzing and classifying different types of sounds by extracting and leveraging meaningful audio features. The dataset was built by collecting metadata and downloading audio from the Freesound API, curating a wide range of sound categories. Preprocessing standardized the dataset by converting all audio files to a uniform length and sample rate, ensuring consistency across categories. Feature extraction techniques were then applied to compute a diverse set of properties, including MFCCs, chroma vectors, spectral contrast, zero-crossing rates, energy measurements, and tonal features. Principal Component Analysis (PCA) was performed to reduce the high-dimensional feature space and identify dominant patterns in the dataset. Unsupervised clustering algorithms (K-Means, Hierarchical Clustering, and DBSCAN) were used to group sounds based on their feature similarities, with evaluation metrics such as silhouette scores guiding parameter choices. Association Rule Mining (ARM) was applied to uncover strong, interpretable relationships between audio features, revealing how certain spectral and temporal characteristics co-occur within categories. Supervised machine learning models, including Logistic Regression, Decision Trees, multiple Naive Bayes variants, Support Vector Machines, and Random Forests, were trained to classify sounds into their respective categories. Model performance was evaluated using confusion matrices, classification report heatmaps, ROC curves, and decision boundary plots to understand the strengths and weaknesses of each approach. Throughout the project, careful attention was paid to how features such as chroma, spectral energy, and zero-crossing rate varied across categories and contributed to the final classification performance. By integrating signal processing, machine learning, and visualization, the project provides a comprehensive exploration of how different types of sounds can be systematically characterized and predicted.
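The first two stages described above, length standardization and feature extraction, can be sketched in a few lines. This is a simplified stand-in that computes only the zero-crossing rate and RMS energy with the standard library. It is not the project's actual code, and the function names, target length, and synthetic test signals are all assumptions for illustration.

```python
import math
import random

def fix_length(samples, target_len):
    """Truncate or zero-pad a clip so it has exactly target_len samples."""
    if len(samples) >= target_len:
        return samples[:target_len]
    return samples + [0.0] * (target_len - len(samples))

def zero_crossing_rate(samples):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(samples, samples[1:])
                    if (a < 0) != (b < 0))
    return crossings / (len(samples) - 1)

def rms_energy(samples):
    """Root-mean-square energy of the clip."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def extract_features(samples, target_len=4000):
    """Standardize the clip length, then compute two simple features."""
    clip = fix_length(list(samples), target_len)
    return {"zcr": zero_crossing_rate(clip), "rms": rms_energy(clip)}

# Two synthetic clips (assumed 8000 Hz sample rate): a 100 Hz hum and noise.
rng = random.Random(0)
hum = [math.sin(2 * math.pi * 100 * i / 8000) for i in range(4000)]
noise = [rng.uniform(-1.0, 1.0) for _ in range(3000)]  # shorter, gets padded

hum_features = extract_features(hum)
noise_features = extract_features(noise)
print(hum_features, noise_features)
```

The noisy clip shows a much higher zero-crossing rate than the hum, which hints at why this feature can help separate tonal from noise-like sounds. Richer features such as MFCCs or chroma vectors would typically come from a dedicated audio library.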

Research Questions

Through this project, the following research questions were explored:

- Can different types of sounds be classified effectively using machine learning models based on spectral, chroma, MFCC, and temporal features?

- Which features among MFCCs, spectral contrast, chroma features, and others contribute the most to distinguishing between sound categories?

- How do sound categories differ in their frequency and energy distributions, and can these differences aid in classification?

- Is it possible to distinguish between natural sounds (e.g., rain, birds) and artificial sounds (e.g., sirens, footsteps) using extracted audio features?

- How does the zero-crossing rate vary across different categories, and does it serve as a useful feature for classification?

- Which sound types exhibit the most variability in their MFCCs, spectral contrast, and tonal features?

- How effectively can clustering algorithms (K-Means, Hierarchical, DBSCAN) group sounds based on principal components of extracted features?

- Can association rule mining uncover strong, interpretable relationships between specific audio features and sound categories?

- How do chroma features vary across different sounds, and can chroma-based patterns enhance classification performance?

- What are the most distinguishing spectral and temporal characteristics between similar-sounding categories, and how can models leverage them?
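For the association rule question above, it helps to recall how a rule's strength is usually quantified. The sketch below computes support, confidence, and lift for a single rule over a toy set of clips. The discretized feature labels (such as `zcr_high`), the categories, and the function name `rule_metrics` are hypothetical and chosen purely for illustration; they are not the project's data or results.

```python
def rule_metrics(transactions, antecedent, consequent):
    """Support, confidence, and lift for the rule antecedent -> consequent."""
    n = len(transactions)
    a, c = set(antecedent), set(consequent)
    count_a = sum(1 for t in transactions if a <= t)
    count_c = sum(1 for t in transactions if c <= t)
    count_both = sum(1 for t in transactions if (a | c) <= t)

    support = count_both / n                      # P(A and C)
    confidence = count_both / count_a if count_a else 0.0  # P(C | A)
    lift = confidence / (count_c / n) if count_c else 0.0  # P(C | A) / P(C)
    return support, confidence, lift

# Hypothetical clips: discretized feature levels plus a category label.
clips = [
    {"zcr_high", "centroid_high", "rain"},
    {"zcr_high", "centroid_high", "rain"},
    {"zcr_high", "centroid_low", "siren"},
    {"zcr_low", "centroid_low", "footsteps"},
    {"zcr_low", "centroid_high", "birds"},
    {"zcr_high", "centroid_high", "birds"},
]

support, confidence, lift = rule_metrics(
    clips, {"zcr_high", "centroid_high"}, {"rain"})
print(round(support, 3), round(confidence, 3), round(lift, 3))
```

A lift above 1 means the feature combination and the category co-occur more often than they would if independent, which is the sense in which a rule is called "strong".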